- 23rd Jan, 2025

- Aarav P.

Three Ways of Object Detection on Android

16th Jul, 2021 | Kinjal D.

- Software Development

Object detection on Android has evolved significantly over the years, and with the advent of powerful machine learning models and improved hardware capabilities, developers now have access to advanced techniques for building robust object detection applications.

This blog covers object detection with TensorFlow, TensorFlow Lite (CPU and GPU), and ML Kit on Android devices. With these tools we can detect, locate, and track objects in a photo or a video, including real-time detection.

What is Object Detection?

Object detection is a computer vision task that involves identifying and locating objects within an image or video frame.

Unlike image classification, where the goal is to assign a label to an entire image, object detection goes a step further by identifying and delineating the specific objects present in the visual data.

This technology plays a crucial role in various applications, ranging from autonomous vehicles and surveillance systems to augmented reality and image search.

What is TensorFlow?

TensorFlow is an open-source end-to-end platform for creating Machine Learning applications. It is a symbolic math library that uses dataflow and differentiable programming to perform various tasks focused on the training and inference of deep neural networks. By using it we can create machine learning applications using various tools, libraries, and community resources.

What is TensorFlow Lite?

TensorFlow Lite is TensorFlow's lightweight solution for mobile and embedded devices. It lets you run machine-learned models on mobile devices with low latency, so you can use them for classification, regression, and other tasks.

What is ML Kit?

ML Kit is a cross-platform mobile SDK used to implement ML techniques in mobile applications. It brings Google's ML technologies, such as Mobile Vision and TensorFlow Lite, together in a single SDK. It offers both on-device and cloud-based APIs.

Three approaches we are going to use for object detection:

- TensorFlow Object Detection: The TensorFlow Object Detection API is a framework for creating a deep learning network that solves object detection problems. We will use a TensorFlow protobuf (.pb) model for this.

- TensorFlow Lite Object Detection: TensorFlow Lite is an open-source deep learning framework to run TensorFlow models on-device. We can detect objects from camera feeds with MobileNet models.

- ML Kit Object Detection API: With ML Kit's on-device Object Detection and Tracking API, you can detect and track objects in an image or live camera feed.

How to use the Camera of an Android Device?

First of all, we need to add camera and internet permissions in the AndroidManifest.xml file along with uses-feature.

<manifest>

<uses-permission android:name="android.permission.CAMERA" />

<uses-permission android:name="android.permission.INTERNET"/>

<uses-feature

android:name="android.hardware.camera"

android:required="true" />

<uses-feature android:name="android.hardware.camera.any" />

<uses-feature

android:name="android.hardware.camera.autofocus"

android:required="false" />

...

</manifest>

For all three concepts we will use the CameraX library of Android.

Use-case-based approach of CameraX:

- Preview: Shows the real-time camera image on the display.

- Image Analysis: Gives you frame-by-frame access to the image data (for example, to compute its luminosity), which you can pass to ML Kit or other image analysis tools for tasks such as object detection.

- Image Capture: Captures a high-quality image and saves it.
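As a rough illustration of what per-frame analysis can look like, the sketch below computes the average brightness of one frame from its luminance (Y) plane. It is plain Java and does not touch the CameraX API: the class name LumaSketch and the method averageLuma are hypothetical, and the ByteBuffer stands in for the luminance bytes that an analyzer would read from a camera frame.

```java
import java.nio.ByteBuffer;

final class LumaSketch {

    // Average brightness (0..255) of the bytes in a luminance plane.
    static double averageLuma(ByteBuffer yPlane) {
        ByteBuffer buf = yPlane.duplicate(); // leave the caller's position untouched
        buf.rewind();
        int count = buf.remaining();
        if (count == 0) {
            return 0.0;
        }
        long sum = 0;
        while (buf.hasRemaining()) {
            sum += buf.get() & 0xFF; // Java bytes are signed; mask to 0..255
        }
        return (double) sum / count;
    }

    public static void main(String[] args) {
        // Four made-up luminance samples standing in for a real frame.
        ByteBuffer frame = ByteBuffer.wrap(new byte[] {0, (byte) 255, (byte) 128, 127});
        System.out.println(averageLuma(frame)); // prints 127.5
    }
}
```

In a real app the same kind of loop would run inside the analyzer callback, where the frame would be handed to the detector instead of being averaged.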

For using CameraX in Android applications you need to add the following dependency in build.gradle (Module: app)

dependencies {

...

//cameraX dependency

def camerax_version = "1.1.0-alpha05"

// CameraX core library using camera2 implementation

implementation "androidx.camera:camera-camera2:$camerax_version"

// CameraX Lifecycle Library

implementation "androidx.camera:camera-lifecycle:$camerax_version"

// CameraX View class

implementation "androidx.camera:camera-view:1.0.0-alpha20"

...

}

Object Detection using TensorFlow:

Why use TensorFlow?

The main reason is that protobuf (.pb) models must be run with the TensorFlow framework rather than TensorFlow Lite.

To use TensorFlow in an Android application, add the following dependency in build.gradle (Module: app):

dependencies {

...

implementation 'org.tensorflow:tensorflow-android:1.13.1'

...

}

Setting Up TensorFlow

There are three steps for setting up TensorFlow in our project:

1. Load your TensorFlow library in a separate class or in an activity where you want to perform object detection.

...

static {

System.loadLibrary("tensorflow_inference");

}

...

Before creating an instance of the TensorFlowInferenceInterface class, we need to define some constants:

- MODEL_FILE - The path of the .pb model, which must be stored in the assets folder

- INPUT_NODE - Name of the input node in our model

- OUTPUT_NODES - An array of output node names (it varies with the .pb model)

- OUTPUT_NODE - Name of the output node in our model

- INPUT_SIZE - Size of the input

2. Create an instance of TensorFlowInferenceInterface, the class responsible for making an inference, that is, a prediction.

private TensorFlowInferenceInterface inferenceInterface;

//inside onCreate method

@Override

protected void onCreate(@Nullable Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

...

inferenceInterface = new TensorFlowInferenceInterface(getAssets(), MODEL_FILE);

...

}

3. Now we create a function that takes a bitmap as input and stores the result of the model in the output array.

Here, each bitmap is created from a frame extracted from the live camera feed or from a video.

private void runTensorFlowOnBitmap(Bitmap bitmap) {

byte[] pixels = getBytes(bitmap);

// Copy the input data into TensorFlow.

inferenceInterface.feed(INPUT_NODE, pixels, INPUT_SIZE);

// Run the inference call.

inferenceInterface.run(OUTPUT_NODES);

// Copy the output Tensor back into the output array.

// result is the array which stores output

inferenceInterface.fetch(OUTPUT_NODE, result);

}
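The getBytes(bitmap) helper is not shown above. One possible shape for it, assuming the model expects interleaved 8-bit RGB input, is sketched below in plain Java. The class and method names are hypothetical, and the int[] stands in for the packed ARGB pixels that Bitmap.getPixels() fills in on Android.

```java
import java.util.Arrays;

final class PixelBytesSketch {

    // Convert packed ARGB pixels into interleaved RGB bytes, dropping alpha.
    static byte[] argbToRgbBytes(int[] argbPixels) {
        byte[] out = new byte[argbPixels.length * 3];
        int i = 0;
        for (int pixel : argbPixels) {
            out[i++] = (byte) ((pixel >> 16) & 0xFF); // red
            out[i++] = (byte) ((pixel >> 8) & 0xFF);  // green
            out[i++] = (byte) (pixel & 0xFF);         // blue
        }
        return out;
    }

    public static void main(String[] args) {
        // One opaque pixel with R=0x10, G=0x20, B=0x30.
        byte[] rgb = argbToRgbBytes(new int[] {0xFF102030});
        System.out.println(Arrays.toString(rgb)); // prints [16, 32, 48]
    }
}
```

Whether a given .pb model wants bytes or normalized floats, and in which channel order, depends on how it was exported, so check the input node of your own model before reusing this layout.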

So this output array contains all the detected objects from a particular image which can be used to draw boxes over the detected object. To draw, utilise the Canvas class from the android.graphics package, which creates a rectangle on the overlay layout using coordinates for exact positioning.

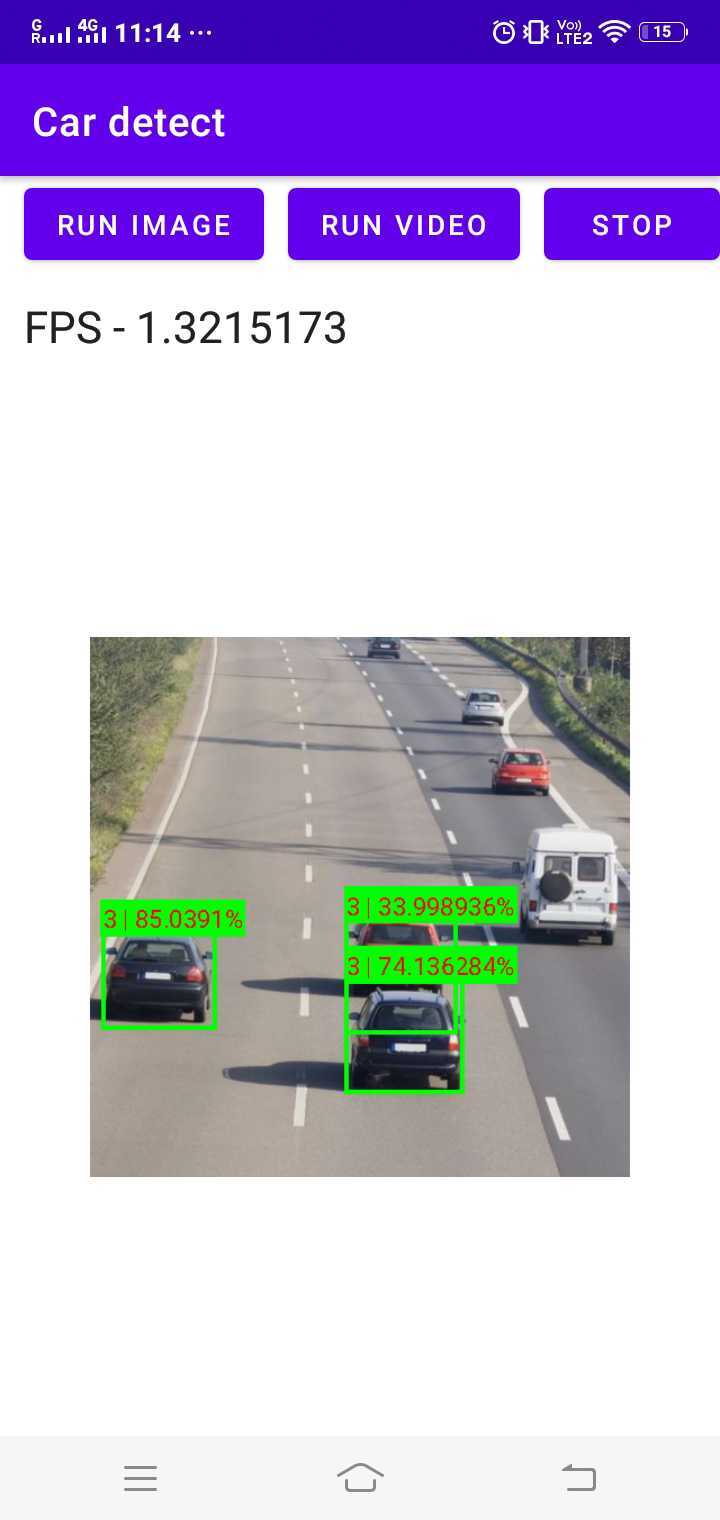

Result:

Object Detection using TensorFlow Lite:

Why use TensorFlow Lite?

Applications built on TensorFlow Lite have better performance and a smaller binary size than those built on TensorFlow Mobile. TensorFlow Lite models have faster inference times and require less processing power, which gives better performance in real-time applications.

TensorFlow Lite can run on the CPU and also supports hardware acceleration through the GPU and NNAPI.

There are two ways we can detect Objects using TensorFlow Lite:

- TensorFlow Lite CPU

- TensorFlow Lite GPU Delegate

To use TensorFlow Lite in an Android application, add the following dependencies in build.gradle (Module: app):

android {

...

aaptOptions {

noCompress "tflite"

}

...

}

dependencies{

...

// TFLite

implementation 'org.tensorflow:tensorflow-lite-task-vision:0.2.0'

// TFLite gpu

implementation('org.tensorflow:tensorflow-lite:2.5.0') { changing = true }

implementation('org.tensorflow:tensorflow-lite-gpu:2.2.0') { changing = true }

implementation('org.tensorflow:tensorflow-lite-support:0.2.0') { changing = true }

...

}

TensorFlow Lite on CPU

- First, we need to create an instance of class ObjectDetector which is a class responsible for detecting objects from images.

import org.tensorflow.lite.support.image.TensorImage;

import org.tensorflow.lite.task.vision.detector.Detection;

import org.tensorflow.lite.task.vision.detector.ObjectDetector;

public class MainActivity extends AppCompatActivity {

...

private ObjectDetector detector = null;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

//initialize detector

initializeDetector();

...

}

private void initializeDetector() {

ObjectDetector.ObjectDetectorOptions options = ObjectDetector.ObjectDetectorOptions.builder()

.setMaxResults(5)

.build();

try {

detector = ObjectDetector.createFromFileAndOptions(

this,

MODEL_FILE,

options

);

} catch (IOException e) {

e.printStackTrace();

}

}

...

}

Here, the MODEL_FILE variable contains the **.tflite** model name.

- Now we create a function to run object detection on a particular image. For that first we need to convert the Bitmap image to TensorImage and then call the detect() method of ObjectDetector for detecting objects.

private void runObjectDetection(Bitmap bitmap) {

//Create TFLite's TensorImage object

TensorImage image = TensorImage.fromBitmap(bitmap);

// Feed given image to the detector

List<Detection> results = detector.detect(image);

if (!results.isEmpty()) {

// results contains the list of detected objects

Log.d(TAG, "Objects = " + results);

}

}

Here, the results list contains all the detected objects from a particular image which can be used to draw boxes over the detected object using the Canvas class.

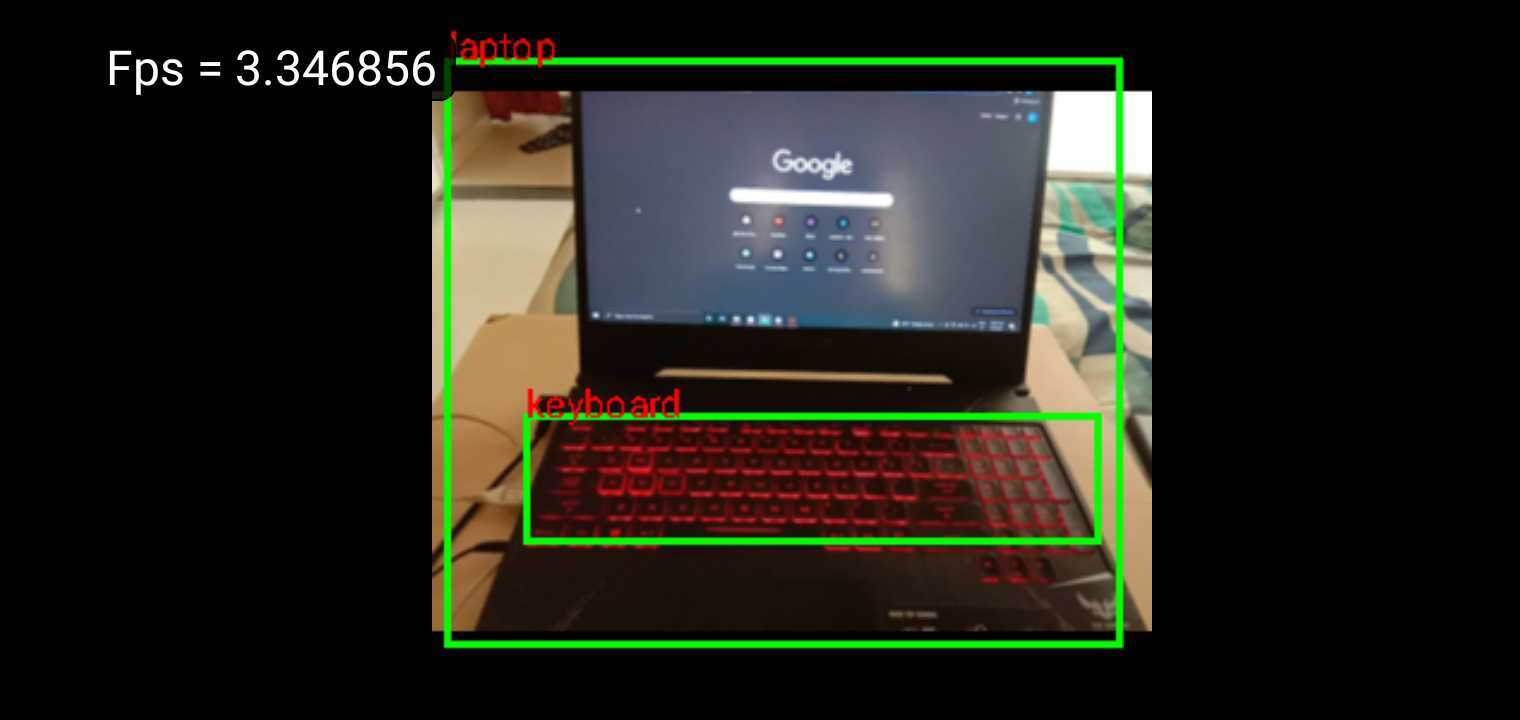

Result:

TensorFlow Lite on GPU

There are many advantages of using GPU acceleration such as:

- Speed - GPUs are designed to have high throughput for massively parallelizable workloads.

- Accuracy - GPUs perform computation with 16-bit or 32-bit floating point numbers and (unlike the CPUs) do not require quantization for optimal performance.

- Energy efficiency - A GPU carries out computations in a very efficient and optimized way, consuming less power and generating less heat than the same task run on a CPU.
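To make the accuracy point more concrete, here is a small sketch of what quantization does to a value. It uses the usual affine scheme, where a real number is stored as an 8-bit integer q and recovered as scale * (q - zeroPoint). The class name, the unsigned 0..255 range, and the scale and zero point used in main are assumptions chosen for illustration and are not taken from any particular model.

```java
final class QuantizationSketch {

    // Map a real value to an unsigned 8-bit integer code, clamped to 0..255.
    static int quantize(float real, float scale, int zeroPoint) {
        int q = Math.round(real / scale) + zeroPoint;
        return Math.max(0, Math.min(255, q));
    }

    // Recover the approximate real value from its integer code.
    static float dequantize(int q, float scale, int zeroPoint) {
        return scale * (q - zeroPoint);
    }

    public static void main(String[] args) {
        float scale = 0.5f;  // illustrative step size between representable values
        int zeroPoint = 10;  // illustrative integer code that represents 0.0
        int q = quantize(1.3f, scale, zeroPoint);
        System.out.println(q);                               // prints 13
        System.out.println(dequantize(q, scale, zeroPoint)); // prints 1.5
    }
}
```

The value 1.3 comes back as 1.5 after the round trip. That rounding error is the price of 8-bit storage, and it is the loss a floating point GPU path avoids.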

Now, we will learn about how to use the GPU backend using the TensorFlow Lite delegate APIs on Android.

Steps for GPU Implementation:

- First, we need to create an instance of the Interpreter class, which is responsible for object detection.

import org.tensorflow.lite.Interpreter;

import org.tensorflow.lite.gpu.GpuDelegate;

public class CameraActivity extends AppCompatActivity {

private Interpreter interpreter;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

//initialize interpreter

initializeGpu();

}

private void initializeGpu() {

// Initialize interpreter with GPU delegate.

GpuDelegate delegate = new GpuDelegate();

Interpreter.Options options = (new Interpreter.Options()).addDelegate(delegate);

try {

interpreter = new Interpreter(loadModelFile(getAssets(), MODEL_FILE), options);

} catch (IOException e) {

e.printStackTrace();

}

}

//Load model for gpu delegate

private MappedByteBuffer loadModelFile(AssetManager assetManager, String modelPath) throws IOException {

// use to get description of file

AssetFileDescriptor fileDescriptor = assetManager.openFd(modelPath);

FileInputStream inputStream = new FileInputStream(fileDescriptor.getFileDescriptor());

FileChannel fileChannel = inputStream.getChannel();

long startOffset = fileDescriptor.getStartOffset();

long declaredLength = fileDescriptor.getDeclaredLength();

return fileChannel.map(FileChannel.MapMode.READ_ONLY, startOffset, declaredLength);

}

}

Here, the MODEL_FILE variable contains the **.tflite** model name. The loadModelFile() method is used to load a model from the assets folder in Android.
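The start offset and declared length matter because the asset is a region inside a larger file, so only that region is mapped. This is likely also why the Gradle snippet earlier sets noCompress "tflite": a region can only be mapped directly when it is stored uncompressed. The plain-Java sketch below shows the same offset-and-length mapping on an ordinary temporary file. The class name, method names, and file contents are made up for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.Arrays;

final class MapRegionSketch {

    // Memory-map only the bytes [startOffset, startOffset + length) of a file.
    static MappedByteBuffer mapRegion(Path file, long startOffset, long length) throws IOException {
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            // The mapping stays valid after the channel is closed.
            return channel.map(FileChannel.MapMode.READ_ONLY, startOffset, length);
        }
    }

    // Build a tiny "archive" whose payload sits at offset 3 and map just that payload.
    static byte[] demo() {
        try {
            Path tmp = Files.createTempFile("archive", ".bin");
            tmp.toFile().deleteOnExit();
            Files.write(tmp, new byte[] {9, 9, 9, 1, 2, 3, 4, 9});
            MappedByteBuffer region = mapRegion(tmp, 3, 4);
            byte[] copy = new byte[region.remaining()];
            region.get(copy);
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(demo())); // prints [1, 2, 3, 4]
    }
}
```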

- Now we need to create a function that provides output with an array of detected objects.

private void runObjectDetection(Bitmap bitmap){

//Create TFLite's TensorImage object

TensorImage image = TensorImage.fromBitmap(bitmap);

// Run inference

// runForMultipleInputsOutputs takes two arguments: an array of inputs

// and a map from output index to the array that receives that output

interpreter.runForMultipleInputsOutputs(new Object[]{image.getBuffer()}, map_of_indices_to_outputs);

Log.e(TAG, "Output : " + map_of_indices_to_outputs);

}

Our tflite model returns multiple outputs, so we use the runForMultipleInputsOutputs method with a map of arrays that receive the results.

private float[][][] boxes = new float[1][10][4];

private float[][] classes = new float[1][10];

private float[][] scores = new float[1][10];

private float[] numOf = new float[1];

Map<Integer, Object> map_of_indices_to_outputs = new HashMap<>();

map_of_indices_to_outputs.put(0, boxes);

map_of_indices_to_outputs.put(1, classes);

map_of_indices_to_outputs.put(2, scores);

map_of_indices_to_outputs.put(3, numOf);

- Draw the bounding boxes on the bitmap using the coordinates from the results. We can also display the object names, which correspond to the classes in the output array.
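Turning the four output arrays into drawable boxes can be sketched as follows. This is plain Java with a hypothetical Box holder and parse method. It assumes each box is stored as [top, left, bottom, right] normalized to 0..1, which is a common layout for SSD-style detection models but is not guaranteed for every model, so verify the order against your own model's outputs.

```java
import java.util.ArrayList;
import java.util.List;

final class DetectionParserSketch {

    // Hypothetical holder for one detection in pixel coordinates.
    static final class Box {
        final int classIndex;
        final float score;
        final int left, top, right, bottom;

        Box(int classIndex, float score, int left, int top, int right, int bottom) {
            this.classIndex = classIndex;
            this.score = score;
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }
    }

    // Keep detections at or above minScore and scale their boxes to the image size.
    static List<Box> parse(float[][][] boxes, float[][] classes, float[][] scores,
                           float[] numOf, int imageWidth, int imageHeight, float minScore) {
        List<Box> out = new ArrayList<>();
        int count = Math.min((int) numOf[0], scores[0].length);
        for (int i = 0; i < count; i++) {
            if (scores[0][i] < minScore) {
                continue;
            }
            float[] b = boxes[0][i]; // assumed order: top, left, bottom, right
            out.add(new Box((int) classes[0][i], scores[0][i],
                    Math.round(b[1] * imageWidth), Math.round(b[0] * imageHeight),
                    Math.round(b[3] * imageWidth), Math.round(b[2] * imageHeight)));
        }
        return out;
    }

    public static void main(String[] args) {
        float[][][] boxes = new float[1][10][4];
        float[][] classes = new float[1][10];
        float[][] scores = new float[1][10];
        float[] numOf = {1f};
        boxes[0][0] = new float[] {0.1f, 0.2f, 0.5f, 0.6f};
        classes[0][0] = 17f;
        scores[0][0] = 0.9f;
        for (Box box : parse(boxes, classes, scores, numOf, 300, 200, 0.5f)) {
            System.out.println(box.classIndex + " @ " + box.left + "," + box.top
                    + "," + box.right + "," + box.bottom);
        }
    }
}
```

On Android the resulting left, top, right, and bottom values can be passed to Canvas.drawRect(), and the class index can be looked up in the model's label file to get a display name.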

Result:

Object Detection using ML Kit:

ML Kit's Vision APIs provide widely used ML models, including object detection. You can use ML Kit's default object detection model directly or supply your own custom tflite model, which is what we are going to implement here.

Add the following dependency in build.gradle (Module: app)

dependencies{

...

// mlkit object detection

// using custom model

implementation 'com.google.mlkit:object-detection-custom:16.3.3'

...

}

Steps to Configure ML Kit in Android:

- Configure the Object Detector

private ObjectDetector objectDetector;

LocalModel localModel = new LocalModel.Builder()

.setAssetFilePath(MODEL_FILE)

.build();

private void initializeObjectDetector(LocalModel localModel) {

CustomObjectDetectorOptions options = new CustomObjectDetectorOptions.Builder(localModel)

.setDetectorMode(CustomObjectDetectorOptions.STREAM_MODE) //Detecting object from live camera feed

.enableClassification() // Optional

.setClassificationConfidenceThreshold(0.5f)

.setMaxPerObjectLabelCount(3)

.build();

objectDetector = ObjectDetection.getClient(options);

}

Here, MODEL_FILE contains the custom model name stored in the assets folder.

- Now, to detect an object, we need to pass an image to the ObjectDetector instance's process() method.

private void detectObjects(InputImage image) {

objectDetector.process(image)

.addOnSuccessListener(

new OnSuccessListener<List<DetectedObject>>() {

@Override

public void onSuccess(List<DetectedObject> detectedObjects) {

// Task completed successfully

// ...

}

})

.addOnFailureListener(

new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

// Task failed with an exception

// ...

}

});

}

If the process() method succeeds, a list of DetectedObjects is passed to the success listener.

Here, each detected object contains the following properties:

- Bounding box - A Rect that gives the position of the object in the image.

- Tracking ID - An integer that identifies the object across images. Null in SINGLE_IMAGE_MODE.

- Labels - Each label contains a description, an index, and a confidence value.

To sum up, these properties help to label every object and to draw bounding boxes over the detected objects.
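One way tracking IDs can be put to use in stream mode is to notice when an object appears for the first time. The plain-Java sketch below does this. The TrackingSketch class and its newObjects method are hypothetical, and the lists of Integer IDs stand in for the tracking IDs read from each frame's detected objects.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class TrackingSketch {

    // IDs that have appeared in any frame so far.
    private final Set<Integer> seen = new HashSet<>();

    // Return the IDs in this frame that were not present in any earlier frame.
    List<Integer> newObjects(List<Integer> trackingIdsInFrame) {
        List<Integer> fresh = new ArrayList<>();
        for (Integer id : trackingIdsInFrame) {
            // A null ID means no tracking information (single image mode); skip it.
            if (id != null && seen.add(id)) {
                fresh.add(id);
            }
        }
        return fresh;
    }

    public static void main(String[] args) {
        TrackingSketch tracker = new TrackingSketch();
        System.out.println(tracker.newObjects(Arrays.asList(1, 2))); // prints [1, 2]
        System.out.println(tracker.newObjects(Arrays.asList(2, 3))); // prints [3]
    }
}
```

A real app would probably also forget IDs that have not been seen for a while, which this sketch leaves out.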

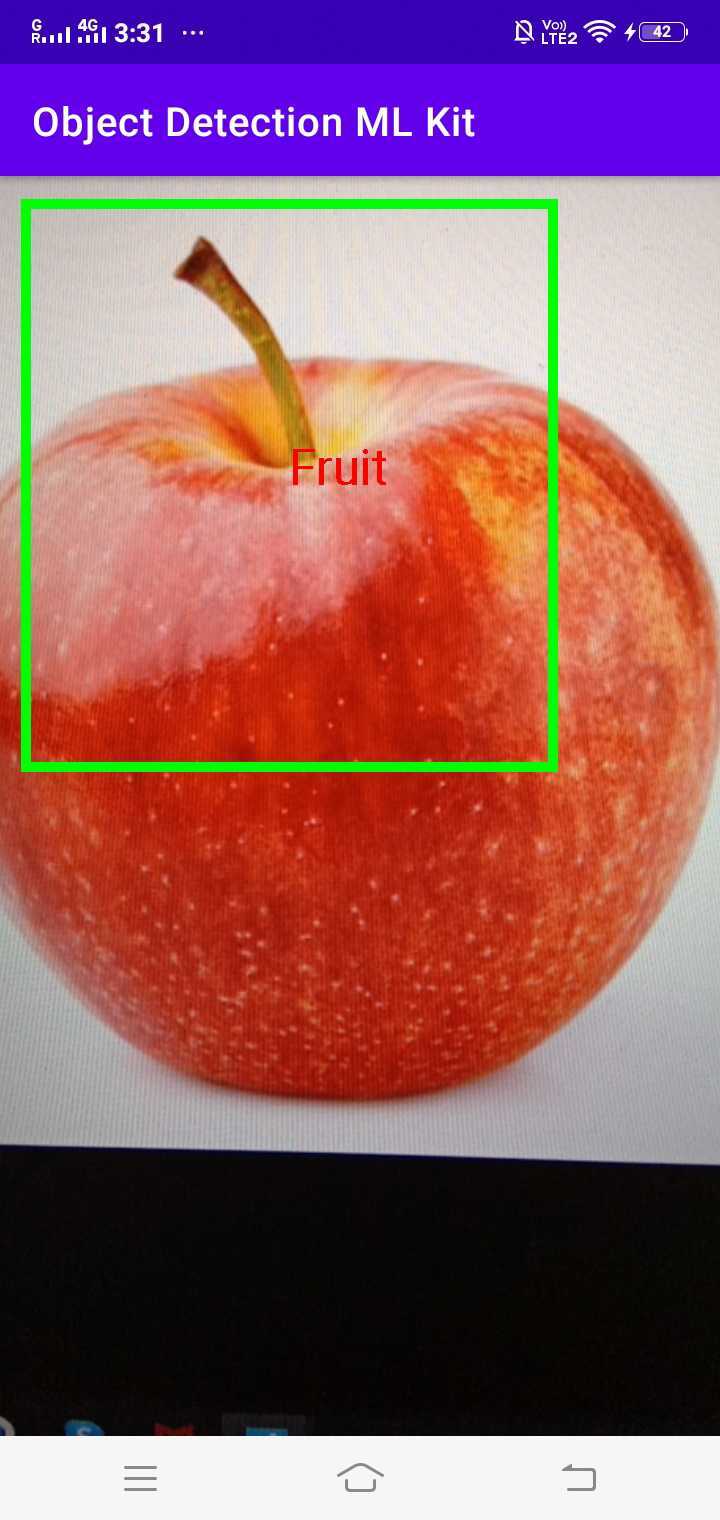

Result:

And that’s about it! These are the ways we can implement Object Detection on Android mobile devices using three different ML libraries: TensorFlow, TensorFlow Lite, and ML Kit.

Conclusion

Object detection on Android has reached new heights with the availability of powerful frameworks like TensorFlow and ML Kit. Whether developers prioritize customization, model size, or ease of integration, there is a suitable solution for every use case.

TensorFlow Object Detection provides the flexibility of building and fine-tuning custom models, making it ideal for applications with specific requirements.

TensorFlow Lite Object Detection focuses on efficient on-device processing, making it well-suited for resource-constrained environments.

ML Kit Object Detection API strikes a balance between simplicity and functionality, offering an easy-to-use solution for developers who want to implement object detection without diving into the complexities of model training.

Ultimately, the choice between these frameworks depends on the specific needs of the Android application, including considerations such as model size, speed, customization requirements, and integration complexity.

As the field of object detection continues to evolve, these frameworks will likely see further enhancements, providing developers with even more tools to create innovative and intelligent applications for Android devices.
